Abstract—Tongue diagnosis, one of the essential diagnostic methods of Traditional Chinese Medicine (TCM), is considered an ideal candidate for remote diagnosis because of its convenience and noninvasiveness. However, the trade-off between accuracy and efficiency and the variation of tongue images pose great challenges for real-time tongue image segmentation. To address these problems, this paper proposes a lightweight architecture based on the encoder-decoder structure. The tongue image feature extraction (TIFE) module is designed to generate features with larger receptive fields without sacrificing spatial resolution. The context module improves performance by aggregating multi-scale contextual information. The decoder is designed as a simple yet efficient feature upsampling module that fuses features from different depths and refines the segmentation results along tongue boundaries. The loss module is proposed to deal with misclassifications caused by class imbalance. A new tongue image dataset (FDU/SHUTCM), containing 5,600 tongue images and their corresponding high-quality masks, is constructed for model training and testing. We demonstrate the effectiveness of the proposed model on BioHit, PolyU/HIT, and our dataset, achieving 99.15%, 95.69%, and 99.03% IoU, respectively. Segmentation of a 513×513 image takes 165 ms on a CPU.

Index Terms- Tongue diagnosis; Remote Diagnosis; Real-time Segmentation; Lightweight Networks; Encoder-decoder; Dilated Convolutions; Multi-scale Context Aggregation; Class Imbalance.

I. INTRODUCTION

Tongue diagnosis is one of the essential diagnostic methods of TCM. Remote diagnosis can help provide convenient and inexpensive medical services. Tongue image segmentation is a binary labeling problem that aims to separate the foreground object (the tongue) from the background (non-tongue) region of a tongue image, and real-time tongue image segmentation is an indispensable step in developing a remote tongue diagnosis system. Most prior work in this area assumes that tongue images are taken by TCM doctors in a well-controlled environment, and nearly ten imaging systems with various imaging characteristics have been developed [1]. In practice, however, most tongue images are taken in uncontrolled environments by people who have not received professional guidance. Depending on the scenario, existing tongue image segmentation methods can be roughly divided into two categories:

Methods for Remote Tongue Diagnosis (RTD). In captured tongue images, the non-tongue parts usually occupy much more space than the tongue. Furthermore, adverse factors such as inconsistent exposure and background clutter seriously affect the precision of segmentation algorithms. Lin et al. [2] propose an end-to-end tongue image segmentation method based on ResNet. Li et al. [3] propose an end-to-end iterative tongue image matting network that decomposes the process into multiple steps, achieving state-of-the-art performance of 97.92% IoU.

Methods for Traditional Computer-aided Tongue Diagnosis (CTD). These methods [4] [5] were designed to process high-quality tongue images captured by professional imaging devices under controlled conditions (inside a dark chamber rather than in the open air). However, they perform poorly on tongue images taken in uncontrolled environments.

Generally speaking, the accuracy of the segmentation method directly affects the tongue diagnosis result. However, memory and computational load during training and testing must also be considered when choosing a real-time method. In summary, real-time tongue image segmentation is difficult for three reasons:

Trade-off between accuracy and efficiency. Real-time remote applications aim to obtain the best accuracy under a limited computational budget imposed by the target platform (e.g., mobile devices with very limited computing power). For real-time tongue image segmentation, both accuracy and efficiency matter.

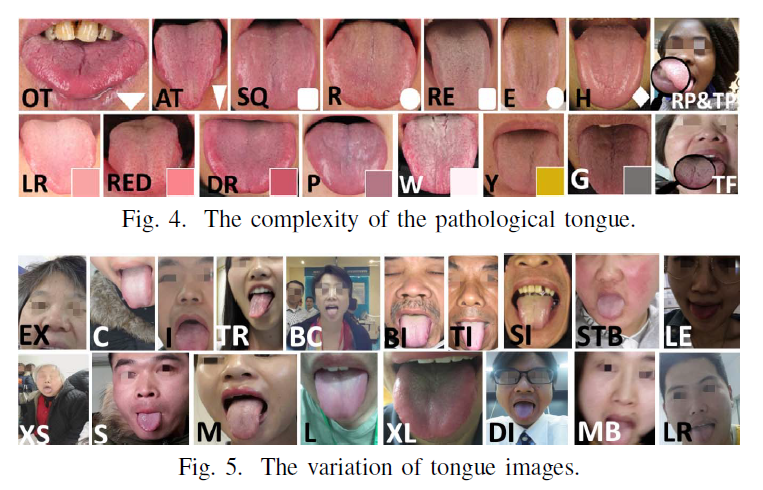

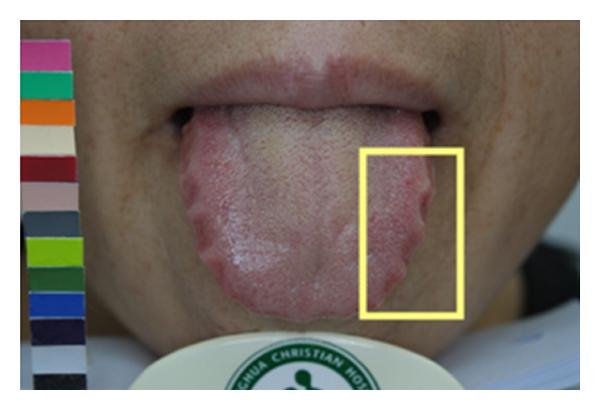

The complexity of the pathological tongue. As a non-rigid organ, the tongue varies greatly in texture, color, shape, and size. Its surface carries abundant pathological details, such as cracks, red points, and tooth prints, which are often only a few pixels in size (see Fig.4).

The variation of tongue images. In addition to the tongue, captured tongue images contain many non-tongue components, such as lips, teeth, inner tissue of the mouth, and part of the upper body. In most captured tongue images, the non-tongue parts occupy much more space than the tongue, which can interfere with segmentation accuracy and robustness. More seriously, the quality of captured tongue images varies considerably: adverse factors such as motion blur, inconsistent exposure, different illumination, and background clutter seriously affect the precision of segmentation algorithms (see Fig.5).

So far, there is no single solution that can address all the problems mentioned above. In this paper, we focus on building a practically fast tongue image segmentation method with decent accuracy. The main contributions of our approach are:

- An efficient real-time architecture is proposed for pixelwise tongue image segmentation (see Fig.1).

- Our model is fast and small. The model size is 9.7 MB. Segmentation of a 513×513 image takes 165 ms on CPU.

- Our model attains new state-of-the-art performance on the BioHit, PolyU/HIT, and FDU/SHUTCM datasets. We also provide a detailed analysis of design choices and model variants.

- We build a remote tongue image segmentation dataset and benchmark (FDU/SHUTCM) for training and testing. To the best of our knowledge, FDU/SHUTCM is the first dataset for evaluating real-time remote tongue image segmentation performance. We will make this dataset publicly available at https://github.com/FDUXilly/FDU-TCMSU and hope it will attract more researchers to this topic.

II. RELATED WORK

A. Image Semantic Segmentation

Deep learning has achieved great success in computer vision tasks such as object detection and semantic segmentation. Semantic segmentation with deep learning architectures is a natural step toward fine-grained inference; its goal is to make dense predictions, inferring a label for every pixel [6]. Currently, the Fully Convolutional Network (FCN) [7] is the common forerunner of most state-of-the-art semantic segmentation techniques. Despite the accuracy and flexibility of the FCN model, it still has some limitations:

- Context information is ignored.

- It is still far from real-time execution at high resolutions.

Real-time Segmentation: Real-time semantic segmentation methods must generate pixel-wise predictions quickly under the limited computation available in mobile applications. ENet [8] reduces the number of downsampling operations and delivers high speed. ESPNet [9] introduces an efficient spatial pyramid module to achieve real-time prediction. In contrast, our method employs a lightweight model that provides a sufficient receptive field and captures adequate context information.

Multi-scale Context Aggregation: One way to integrate context information is multi-scale context aggregation. PSPNet [10] and the DeepLab series [12] [13] [14] combine richer context information with multi-scale feature representations to obtain high-quality segmentation results.

Encoder-decoder Networks: Encoder-decoder networks have been successfully applied to many computer vision tasks. Typically, the encoder gradually reduces the resolution of the feature maps and captures higher-level semantic information, while the decoder gradually recovers the spatial information and obtains sharp object boundaries. U-Net [16] uses skip connections, and SegNet [15] uses the saved max-pooling indices to recover spatial information.

B. Lightweight Backbone Networks

Recently, many applications demand local processing of data on edge devices, and there has been rising interest in building efficient networks by improving the internal structure of existing networks. SqueezeNet [17] proposes the Fire module, which uses 1×1 squeeze convolutions to reduce the number of channels. MobileNetV1 [18] is based on a streamlined architecture that uses depthwise and pointwise convolution layers to build lightweight neural networks. ShuffleNet [20] combines pointwise group convolution with channel shuffle, improving computational efficiency and reducing the number of parameters. MobileNetV2 [19] introduces inverted residuals and linear bottlenecks to achieve near state-of-the-art segmentation performance.

III. METHOD

A. Encoder Module

Dilated convolution for dense feature extraction and field-of-view enlargement. Dilated convolution is a powerful tool that supports exponential expansion of the receptive field without loss of resolution when the dilation rates of stacked layers grow exponentially.
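The exponential growth can be checked with a short receptive-field calculation. This is a minimal sketch, assuming stride-1 3×3 convolutions and a hypothetical dilation schedule of 1, 2, 4, 8 (the dilation rates used inside TIFE are not stated here); with padding equal to the dilation rate, each 3×3 layer keeps the spatial resolution unchanged.

```python
from typing import Sequence


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Receptive field (in pixels) of stacked stride-1 convolutions.

    Each layer with dilation d widens the field by (kernel_size - 1) * d;
    stride 1 plus "same" padding keeps the output resolution unchanged.
    """
    field = 1
    for d in dilations:
        field += (kernel_size - 1) * d
    return field


# Compare plain 3x3 stacks with stacks whose dilation doubles at every layer
# (1, 2, 4, 8 is a hypothetical schedule chosen for illustration).
for depth in range(1, 5):
    plain = receptive_field(3, [1] * depth)
    dilated = receptive_field(3, [2 ** i for i in range(depth)])
    print(f"{depth} layers: plain={plain:2d}  dilated={dilated:2d}")
```

The plain stack grows linearly (3, 5, 7, 9) while the doubling schedule grows as 2^(n+1) - 1 (3, 7, 15, 31), with exactly the same number of weights per layer.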

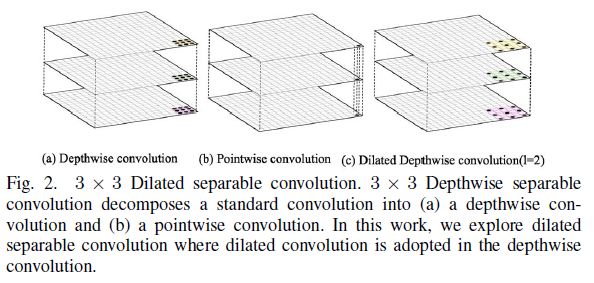

Dilated separable convolution for computational efficiency. Depthwise separable convolution, illustrated in Fig.2(a)(b), factorizes a standard convolution into a depthwise convolution followed by a pointwise (1×1) convolution. Dilated separable convolution [14] factorizes a standard convolution into a pointwise convolution and a dilated depthwise convolution (see Fig.2(c)); it reduces the computational complexity of the model while achieving better performance.
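To make the savings concrete, the sketch below counts weights and multiply-adds for a single layer. The 256-channel layer on a 65×65 feature map is a hypothetical example, not a configuration taken from the paper. It uses the depthwise-then-pointwise order of Fig.2(a)(b); when the input and output channel counts are equal, the pointwise-first order of Fig.2(c) gives the same count.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k standard convolution (bias omitted)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k depthwise convolution plus a 1x1 pointwise convolution.

    Dilation does not change either count: it only spaces the k x k taps apart.
    """
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1x1 convolution mixing channels
    return depthwise + pointwise


def multiply_adds(params: int, height: int, width: int) -> int:
    """For stride-1 'same' convolutions every weight is used once per output pixel."""
    return params * height * width


k, c, h, w = 3, 256, 65, 65  # hypothetical layer: 256 channels on a 65x65 map
std = standard_conv_params(k, c, c)
sep = separable_conv_params(k, c, c)
print(f"standard : {std:,} params, {multiply_adds(std, h, w):,} mult-adds")
print(f"separable: {sep:,} params, {multiply_adds(sep, h, w):,} mult-adds")
print(f"ratio = {sep / std:.4f}  (theory: 1/c_out + 1/k^2 = {1 / c + 1 / k ** 2:.4f})")
```

The ratio 1/c_out + 1/k^2 shows why the saving approaches roughly 9× for 3×3 kernels once the channel count is large.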

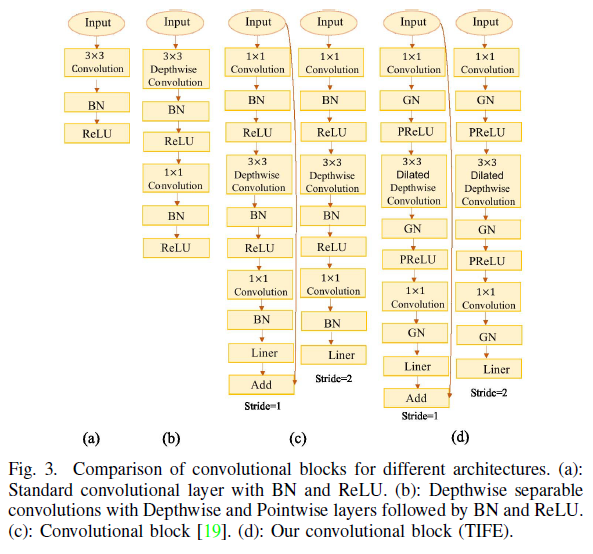

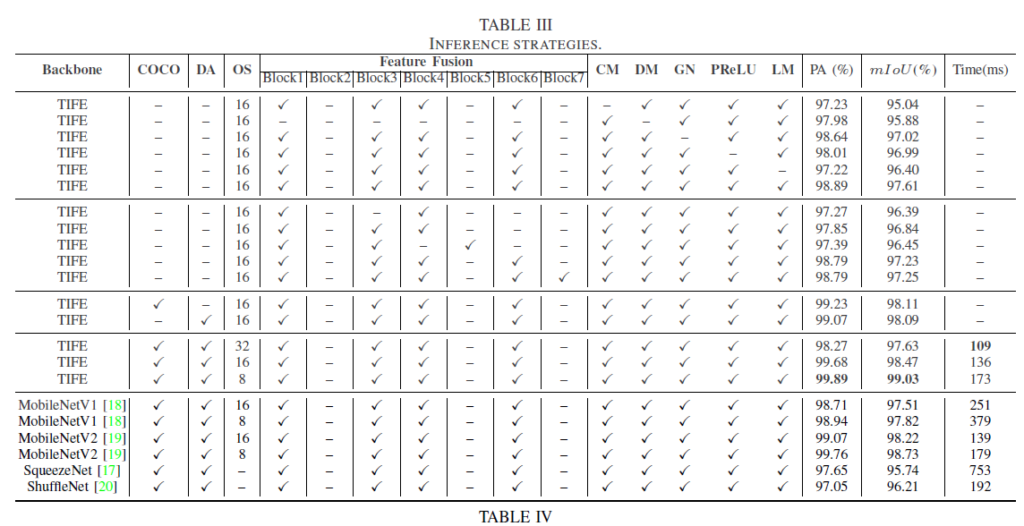

The Tongue Image Feature Extraction (TIFE) module. We propose cascaded convolutional blocks as tongue feature extractors (see Fig.3). We use PReLU [23] as the non-linearity, which performs slightly better than ReLU for image segmentation (see TABLE III). Group Normalization [21] is used during training. Every layer (pointwise convolution and dilated depthwise convolution) is followed by Group Normalization and a PReLU non-linearity, except for the last pointwise convolution.
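The two non-convolutional operations in each TIFE layer can be sketched in plain Python. This is a minimal illustration, assuming two groups and an initial PReLU slope of 0.25 (the initialization suggested in [23]); the learnable GN scale and shift are omitted, and the group count used in the paper is not stated here. Because GN normalizes each sample over groups of channels, its statistics do not depend on the batch size.

```python
import math
from typing import List

# A single feature map stored as [channels][pixels] (spatial dims flattened).
FeatureMap = List[List[float]]


def group_norm(x: FeatureMap, num_groups: int, eps: float = 1e-5) -> FeatureMap:
    """Group Normalization for one sample, without the learnable scale/shift.

    Channels are split into `num_groups` groups; each group is normalized with
    its own mean and variance, so no batch statistics are needed.
    """
    channels = len(x)
    if channels % num_groups != 0:
        raise ValueError("number of channels must be divisible by num_groups")
    size = channels // num_groups
    out: FeatureMap = []
    for g in range(num_groups):
        group = x[g * size:(g + 1) * size]
        values = [v for ch in group for v in ch]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)
        out.extend([[(v - mean) * scale for v in ch] for ch in group])
    return out


def prelu(x: FeatureMap, slopes: List[float]) -> FeatureMap:
    """Channel-wise PReLU: identity for v >= 0, learnable slope a_c for v < 0."""
    return [[v if v >= 0 else a * v for v in ch] for ch, a in zip(x, slopes)]


x = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0],
     [-1.0, -1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]
# GN followed by PReLU, as after each non-final TIFE convolution.
y = prelu(group_norm(x, num_groups=2), slopes=[0.25] * 4)
```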

The context module: dilated spatial pyramid pooling for multi-scale context aggregation. The context module is designed to improve dense prediction by aggregating multi-scale contextual information. In our task, the object (the tongue) varies greatly in size (see Fig.4, Fig.5). To handle this, the feature maps must cover receptive fields of different scales, so several parallel dilated convolutional layers with different dilation rates are used, a design known as atrous spatial pyramid pooling (ASPP) [13]. ASPP consists of one 1×1 convolution, three 3×3 dilated convolutions, and one global average pooling branch.
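The need for several parallel rates becomes clearer once we compute how much of the encoder output each branch covers. The sketch below assumes the DeepLabv3 [13] rates (6, 12, 18) at output stride 16 and their doubled values (12, 24, 36) at output stride 8, together with a 513×513 input that yields 33×33 or 65×65 encoder outputs under "same"-padded stride-2 layers; the rates actually used in this paper are not stated here.

```python
from typing import List, Tuple


def effective_kernel_size(k: int, rate: int) -> int:
    """Spatial extent spanned by a k x k convolution with dilation `rate`."""
    return k + (k - 1) * (rate - 1)


def aspp_extents(rates: List[int], feature_size: int) -> List[Tuple[str, int]]:
    """Extent (in feature-map pixels) covered by each parallel ASPP branch."""
    branches = [("1x1 conv", 1)]
    for r in rates:
        branches.append((f"3x3 conv, rate {r}", effective_kernel_size(3, r)))
    # The image-level branch averages the whole map, so it always sees everything.
    branches.append(("global average pooling", feature_size))
    return branches


for rates, size in (([6, 12, 18], 33), ([12, 24, 36], 65)):
    print(f"feature map {size}x{size}")
    for name, extent in aspp_extents(rates, size):
        note = "  (larger than the map: most taps hit padding)" if extent > size else ""
        print(f"  {name:<24}{extent:>3}{note}")
```

As the rate approaches the feature-map size, fewer of the nine taps land on valid pixels and the 3×3 filter degenerates toward a 1×1 filter; [13] gives this as the motivation for the global-average-pooling branch.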

B. Decoder Module

The role of the decoder module is to upsample the output of the encoder and refine the details. In our work, the decoder is designed as an efficient feature upsampling module that fuses low-level feature maps from Block (1,3,4,6). We propose to fuse low-level features (extracted from earlier layers) and high-level features (extracted from later layers) directly: the encoder features are first upsampled and then concatenated with low-level features from the cascaded convolutional blocks (see Fig.1). We apply a 1×1 convolution to the fused low-level features to reduce the number of channels. This simple yet effective decoder module refines the segmentation results (see TABLE III).
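The fusion step can be illustrated on toy tensors. This sketch assumes nearest-neighbor upsampling (bilinear interpolation is the more common choice but needs more code), and the helper names, tensor sizes, and weights are hypothetical; it follows the order described in this subsection: upsample, concatenate along channels, then reduce channels with a 1×1 convolution.

```python
from typing import List

# Feature maps stored as [channels][rows][cols].
Tensor = List[List[List[float]]]


def upsample_nearest(x: Tensor, factor: int) -> Tensor:
    """Repeat every pixel `factor` times along both spatial axes."""
    return [[[row[j // factor] for j in range(len(row) * factor)]
             for row in ch for _ in range(factor)]
            for ch in x]


def concat_channels(a: Tensor, b: Tensor) -> Tensor:
    """Stack two feature maps along the channel axis (spatial sizes must match)."""
    if (len(a[0]), len(a[0][0])) != (len(b[0]), len(b[0][0])):
        raise ValueError("spatial sizes differ; upsample before concatenating")
    return a + b


def conv1x1(x: Tensor, weights: List[List[float]]) -> Tensor:
    """1x1 convolution: weights[o][c] mixes input channel c into output channel o."""
    if any(len(w_out) != len(x) for w_out in weights):
        raise ValueError("each weight row needs one entry per input channel")
    height, width = len(x[0]), len(x[0][0])
    return [[[sum(w * x[c][i][j] for c, w in enumerate(w_out)) for j in range(width)]
             for i in range(height)]
            for w_out in weights]


high = [[[1.0, 2.0], [3.0, 4.0]]]                    # 1-channel 2x2 encoder output
low = [[[0.0] * 4 for _ in range(4)],                 # 2-channel 4x4 low-level features
       [[1.0] * 4 for _ in range(4)]]
fused = concat_channels(upsample_nearest(high, 2), low)   # 3 channels, 4x4
out = conv1x1(fused, [[1.0, 0.5, -1.0]])                 # reduced to 1 channel
```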

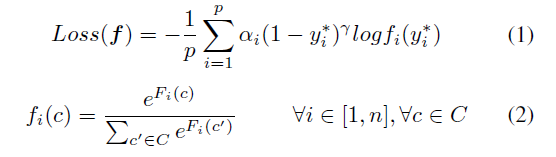

C. Loss Module

In most remote tongue images, the number of foreground pixels is much smaller than the number of background pixels, resulting in a severe imbalance between positive and negative samples. Following focal loss [22], we employ a hard-sample-aware loss (see (1)), where each pixel i of a given image has to be classified into a class c ∈ C = {tongue, non-tongue}, p is the number of pixels in the image or minibatch considered, y_i* ∈ C is the ground-truth class of pixel i, (1 − f_i(y_i*))^γ is a modulating factor, α_i is a balancing factor, f_i(y_i*) is the probability that pixel i belongs to its correct class, and f is the vector of all network outputs f_i(c). These probabilities are obtained by mapping the unnormalized scores F_i(c) through a softmax unit (see (2)).

During testing, the commonly used decision function picks the class with the maximum score: the predicted class for pixel i is y_i = argmax_{c ∈ C} F_i(c). We observe that the proposed loss module consistently improves performance by handling class imbalance (see TABLE III).
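Because Eqs. (1) and (2) are not reproduced in this text, the sketch below assumes the standard focal-loss form from [22], L = -(1/p) Σ_i α_i (1 - f_i(y_i*))^γ log f_i(y_i*), with f_i(c) obtained from a softmax over the scores F_i(c). Taking α_i as a per-class value α[y_i*], and the values γ = 2 and α = 1, are illustrative assumptions (γ = 2 is the default reported in [22]), not the paper's settings.

```python
import math
from typing import List, Sequence

# Class indices (assumed ordering): 0 = non-tongue, 1 = tongue.


def softmax(scores: Sequence[float]) -> List[float]:
    """Map unnormalized scores F_i(c) to probabilities f_i(c)."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(scores: Sequence[Sequence[float]], labels: Sequence[int],
               alpha: Sequence[float], gamma: float = 2.0) -> float:
    """Mean focal loss over p pixels.

    scores[i] holds F_i(c) for every class c, labels[i] is y_i*, and the
    balancing factor alpha_i is taken as alpha[y_i*] (an assumption).
    """
    total = 0.0
    for pixel_scores, y in zip(scores, labels):
        f_true = softmax(pixel_scores)[y]        # f_i(y_i*)
        modulating = (1.0 - f_true) ** gamma     # down-weights easy pixels
        total += -alpha[y] * modulating * math.log(f_true)
    return total / len(scores)


def predict(scores: Sequence[Sequence[float]]) -> List[int]:
    """Test-time decision: y_i = argmax_c F_i(c) for every pixel."""
    return [max(range(len(s)), key=lambda c: s[c]) for s in scores]


# One easy (confident) and one hard (misclassified) tongue pixel.
scores = [[-2.0, 3.0], [0.2, 0.0]]
labels = [1, 1]
ce = focal_loss(scores, labels, alpha=[1.0, 1.0], gamma=0.0)  # gamma=0: cross-entropy
fl = focal_loss(scores, labels, alpha=[1.0, 1.0], gamma=2.0)
print(f"cross-entropy {ce:.4f}, focal {fl:.4f}, predictions {predict(scores)}")
```

Setting γ = 0 and α = 1 recovers ordinary cross-entropy, which makes it easy to see how much the modulating factor suppresses the contribution of confidently classified (mostly background) pixels.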

IV. EXPERIMENTS

A. Data Preparation

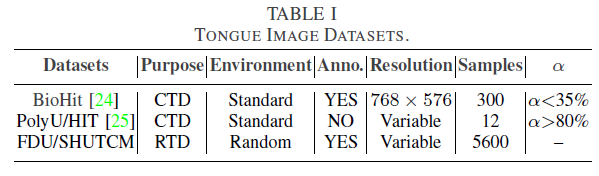

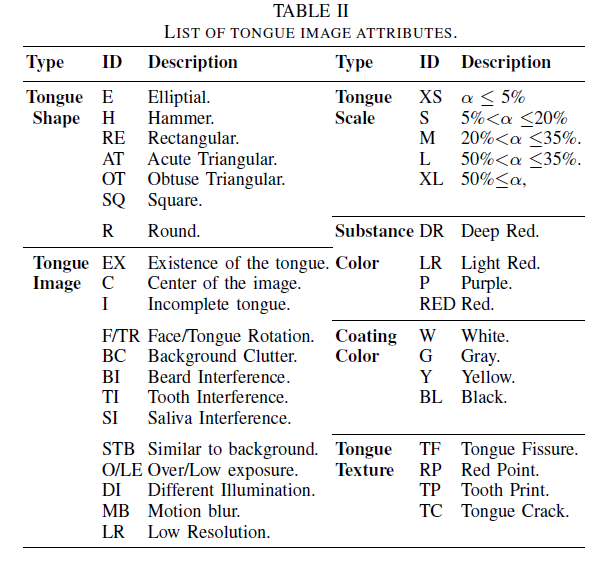

So far, there are only a few public standard tongue image datasets [24] [25] (see TABLE I), and the images in both are taken by TCM doctors in a well-controlled environment. To fill this gap, we construct a new dataset named FDU/SHUTCM as a benchmark for real-time remote tongue image segmentation.

Data amount. We collected 5,600 tongue images in JPG format. These images are split into training and testing sets of 4,600 and 1,000 images, respectively. We labeled them manually with the Quick Selection tool in Photoshop.

Image diversity. Images in our dataset exhibit large structural variation in both foreground and background regions (see TABLE II, where α = area(tongue body) / area(tongue image)). As a non-rigid organ, the tongue varies greatly in size, shape, color, and texture (see Fig.4).
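The ratio α can be computed from a ground-truth mask by counting pixels. The sketch below assumes masks stored as 0/1 arrays with 1 marking the tongue body; the storage format of the released masks is not specified here.

```python
from typing import Sequence


def tongue_area_ratio(mask: Sequence[Sequence[int]]) -> float:
    """alpha = area(tongue body) / area(tongue image) for a binary mask (1 = tongue)."""
    total = sum(len(row) for row in mask)
    tongue = sum(1 for row in mask for v in row if v)
    return tongue / total


mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]
print(tongue_area_ratio(mask))  # 0.25
```

A small α corresponds to the typical remote-capture case in which the non-tongue background dominates the image.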

Data augmentation. To improve the performance of the model, we apply rotation, reflection, resizing, and gamma correction to increase the number of training images. Four rotation angles {-45°, -20°, 20°, 45°}, four scales {0.5, 0.8, 1.2, 1.5}, and four gamma values {0.5, 0.8, 1.2, 1.5} are used.
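A minimal sketch of the reflection, resizing, and gamma steps on an 8-bit grayscale image is given below. It assumes the convention out = 255·(in/255)^γ (some tools use 1/γ instead), uses nearest-neighbor resizing as a stand-in for proper interpolation, and applies each transform on its own; whether the paper combines transforms is not stated. Rotation needs interpolation and border handling and is better left to an image library (for example, Pillow's `Image.rotate`).

```python
from typing import List

Image = List[List[int]]  # grayscale, 8-bit values in [0, 255]

# Parameter sets listed in the text.
ROTATIONS_DEG = (-45, -20, 20, 45)
SCALES = (0.5, 0.8, 1.2, 1.5)
GAMMAS = (0.5, 0.8, 1.2, 1.5)


def gamma_lut(gamma: float) -> List[int]:
    """Lookup table for out = 255 * (in / 255) ** gamma (gamma < 1 brightens)."""
    return [round(255 * (v / 255) ** gamma) for v in range(256)]


def apply_gamma(img: Image, gamma: float) -> Image:
    lut = gamma_lut(gamma)
    return [[lut[v] for v in row] for row in img]


def reflect(img: Image) -> Image:
    """Horizontal reflection (left-right flip)."""
    return [row[::-1] for row in img]


def resize_nearest(img: Image, scale: float) -> Image:
    """Nearest-neighbor resize by `scale` (a stand-in for proper interpolation)."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, round(h * scale)), max(1, round(w * scale))
    return [[img[min(h - 1, int(i / scale))][min(w - 1, int(j / scale))]
             for j in range(new_w)] for i in range(new_h)]


img = [[0, 64], [128, 255]]
variants = [reflect(img)]
variants += [resize_nearest(img, s) for s in SCALES]
variants += [apply_gamma(img, g) for g in GAMMAS]
```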

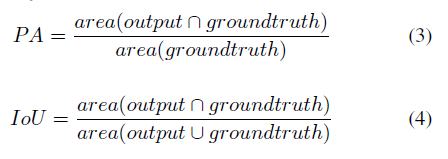

B. Performance Evaluation Metrics

Real-time semantic segmentation methods must generate pixel-wise predictions quickly under the limited computation available in mobile applications. Several aspects must therefore be evaluated to assess the validity and usefulness of a method: accuracy, latency, and computational cost. Accuracy is measured by Pixel Accuracy (PA), the fraction of correctly classified pixels, and Intersection-over-Union (IoU), the ratio of the intersection to the union of the predicted and ground-truth tongue regions.
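Both metrics reduce to pixel counts on binary masks. The sketch below is one straightforward reading of the definitions; whether the reported IoU covers the tongue class only or is averaged over both classes, and whether it is averaged per image or accumulated over the test set, are assumptions not fixed by the text.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # 1 = tongue, 0 = non-tongue


def pixel_accuracy(pred: Mask, gt: Mask) -> float:
    """Fraction of pixels whose predicted label matches the ground truth."""
    correct = total = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            correct += int(p == g)
            total += 1
    return correct / total


def iou(pred: Mask, gt: Mask, cls: int = 1) -> float:
    """Intersection over union of the pixels labeled `cls` in prediction and ground truth."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += int(p == cls and g == cls)
            union += int(p == cls or g == cls)
    return inter / union if union else 1.0  # both empty: treat as a perfect match


gt = [[1, 1, 0], [0, 1, 0]]
pred = [[1, 0, 0], [0, 1, 1]]
print(pixel_accuracy(pred, gt), iou(pred, gt), (iou(pred, gt, 0) + iou(pred, gt, 1)) / 2)
```

Because the background dominates remote tongue images, PA can stay high even when the tongue boundary is poor; tongue-class IoU is the stricter of the two measures.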

C. Experiment Results

We conduct the experiments on a computer with a 56-core Xeon E5-2660 2.00 GHz processor, 128 GB of RAM, and four TITAN Xp graphics cards.

Inference strategies. We define the output stride as the ratio of the input image resolution to the encoder output resolution. OS: the output stride used during training. COCO: models pretrained on MS-COCO [28]. DA: data augmentation. CM: employing the proposed context module. DM: employing the proposed decoder module. LM: employing the proposed loss module.
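For example, the 513×513 inputs used in this paper give the encoder output sizes computed below. This is a sketch assuming the output stride is reached through stride-2 layers with "same" padding, each producing ceil(n/2) outputs from n inputs (a common convention, e.g. in DeepLab [14]); the exact layer configuration is not given here.

```python
def encoder_output_size(input_size: int, output_stride: int) -> int:
    """Side length of the encoder output for a square input.

    Assumes the stride is reached by repeated stride-2 layers with "same"
    padding, each producing ceil(n / 2) outputs from n inputs.
    """
    if output_stride < 1 or output_stride & (output_stride - 1):
        raise ValueError("output_stride must be a power of two")
    size, stride = input_size, 1
    while stride < output_stride:
        size = -(-size // 2)  # ceiling division
        stride *= 2
    return size


for os_ in (8, 16):
    side = encoder_output_size(513, os_)
    print(f"OS={os_:2d}: {side}x{side} = {side * side} positions")
```

Under this assumption, OS = 8 yields a 65×65 map (4,225 positions) versus 33×33 (1,089 positions) for OS = 16, roughly 3.9 times as many, which is why a smaller output stride costs more computation.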

We found that the TIFE module significantly reduces the computational complexity of the model while achieving better performance than [18], [19], [17], [20]. As shown in TABLE III, using output stride = 8 brings a 0.56% improvement over output stride = 16; adding CM, DM, GN, PReLU, and LM further improves performance by 2.57%, 1.73%, 0.59%, 0.62%, and 1.21%, respectively. Moreover, concatenating the low-level feature maps from Block (1,3,4,6) leads to better performance. Pretraining the proposed model on the MS-COCO dataset yields an additional improvement of about 0.5%, and adopting data augmentation as post-processing brings another 0.48% improvement.

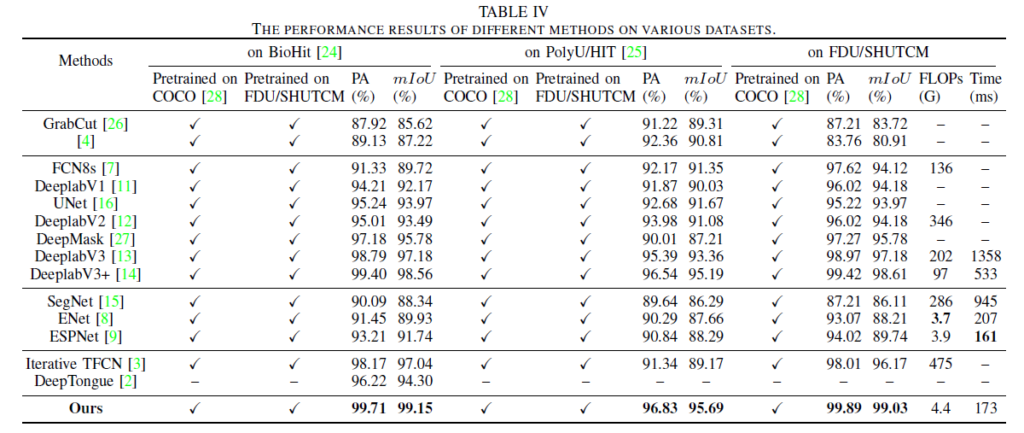

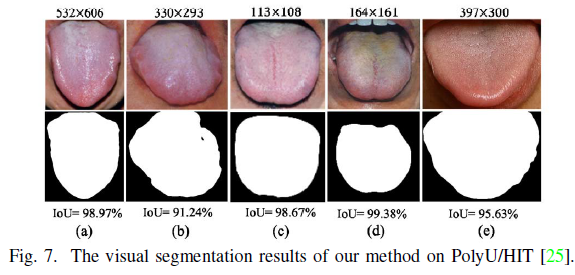

Performance on BioHit and PolyU/HIT. BioHit [24] is a public tongue image dataset consisting of 300 tongue images in BMP format, all with a resolution of 768 × 576. We randomly selected 100 BioHit images for testing. The PolyU/HIT tongue image dataset [25] (without annotations) contains 12 color images in BMP format with varying resolutions.

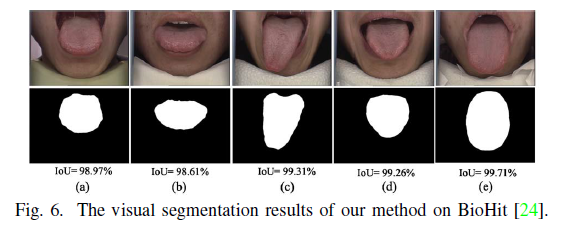

Our model is pretrained on the MS-COCO and FDU/SHUTCM datasets. We compare our method with representative methods; it achieves 99.15% and 95.69% IoU on BioHit and PolyU/HIT, respectively, outperforming the other methods (see TABLE IV). Visual segmentation results of our method on BioHit and PolyU/HIT are shown in Fig.6 and Fig.7.

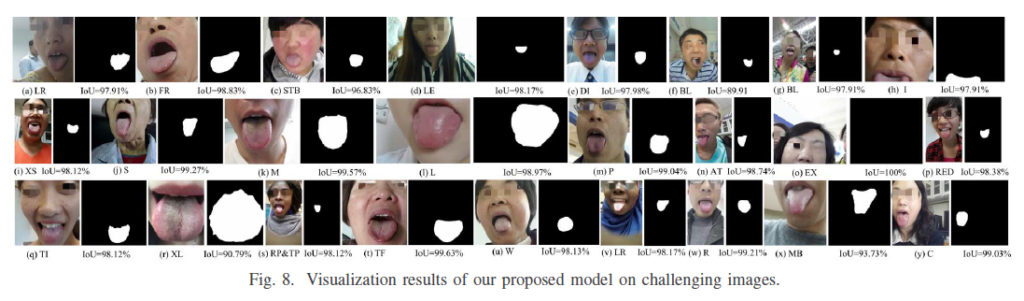

Performance on FDU/SHUTCM. We perform a thorough comparison between our model and existing state-of-the-art methods on the 1,000 labeled test images. TABLE IV shows the experimental results on the FDU/SHUTCM test data, covering approaches based on hand-crafted features [4], [26] and deep CNN features [7], [11], [16], [12], [27], [13], [15], [14], [8], [9], [3], [2]. As can be observed, our model is significantly more accurate than the state-of-the-art methods [14] [13], while its inference speed and computational cost remain comparable to those of real-time methods [15] [8] [9]. With a computational budget of only 4.4 GFLOPs, our method achieves 99.03% IoU on the FDU/SHUTCM dataset, better than the other methods. The model size is 9.7 MB, and segmentation of a 513×513 tongue image takes 165 ms on a CPU. Furthermore, our model is robust to challenging inputs; visual segmentation results are shown in Fig.8.

V. CONCLUSION

In this paper, we have proposed a novel real-time tongue image segmentation method. Our architecture is fast and small, yet still preserves segmentation accuracy. It is also robust to many adverse factors, such as motion blur, inconsistent exposure, different illumination, and background clutter. A new tongue image dataset (FDU/SHUTCM) containing 5,600 images and their corresponding high-quality masks is introduced, contributing to the development of machine learning in tongue diagnosis. Finally, our experimental results show that the proposed model sets new state-of-the-art performance on the BioHit, PolyU/HIT, and FDU/SHUTCM datasets.

ACKNOWLEDGEMENT

This work was supported by the National Natural Science Foundation of China (No. 81373555, No. 81774205), Special Fund of the Ministry of Education of China (No. 2018A11005) and Jihua Lab under Grant No.Y80311W180.

REFERENCES

[1] D. Zhang, H. Zhang, and B. Zhang. Tongue Image Analysis. Springer, 2017.

[2] Bingqian Lin, Junwei Xie, Cuihua Li, and Yanyun Qu. DeepTongue: Tongue segmentation via ResNet. pages 1035–1039, 2018.

[3] Xinlei Li, Tong Yang, Yangyang Hu, Menglong Xu, Wenqiang Zhang, and Fufeng Li. Automatic tongue image matting for remote medical diagnosis. pages 561–564, 11 2017.

[4] Jingwei Guo, Yikang Yang, Qingwei Wu, Jionglong Su, and Ma Fei. Adaptive active contour model based automatic tongue image segmentation. In International Congress on Image & Signal Processing, 2017.

[5] Kebin Wu and David Zhang. Robust tongue segmentation by fusing region-based and edge-based approaches. Expert Systems With Applications, 42(21):8027–8038, 2015.

[6] Alberto Garcia-Garcia, Sergio Orts-Escolano, Sergiu Oprea, Victor Villena-Martinez, and Jose Garcia-Rodriguez. A review on deep learning techniques applied to semantic segmentation. arXiv: Computer Vision and Pattern Recognition, 2017.

[7] Jonathan Long, Evan Shelhamer, and Trevor Darrell. Fully convolutional networks for semantic segmentation. In Computer Vision and Pattern Recognition, pages 3431–3440, 2015.

[8] Adam Paszke, Abhishek Chaurasia, Sangpil Kim, and Eugenio Culurciello. ENet: A deep neural network architecture for real-time semantic segmentation. arXiv: Computer Vision and Pattern Recognition, 2017.

[9] Sachin Mehta, Mohammad Rastegari, Anat Caspi, Linda G Shapiro, and Hannaneh Hajishirzi. ESPNet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In European Conference on Computer Vision, pages 561–580, 2018.

[10] Hengshuang Zhao, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. Pyramid scene parsing network. In Computer Vision and Pattern Recognition, pages 6230–6239, 2017.

[11] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L. Yuille. Semantic image segmentation with deep convolutional nets and fully connected CRFs. Computer Science, (4):357–361, 2014.

[12] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L. Yuille. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. CoRR, abs/1606.00915, 2016.

[13] Liang-Chieh Chen, George Papandreou, Florian Schroff, and Hartwig Adam. Rethinking atrous convolution for semantic image segmentation. arXiv: Computer Vision and Pattern Recognition, 2017.

[14] Liang-Chieh Chen, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam. Encoder-decoder with atrous separable convolution for semantic image segmentation. In European Conference on Computer Vision, pages 833–851, 2018.

[15] Vijay Badrinarayanan, Alex Kendall, and Roberto Cipolla. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(12):2481–2495, 2017.

[16] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing & Computer-assisted Intervention, 2015.

[17] Forrest N Iandola, Song Han, Matthew W Moskewicz, Khalid Ashraf, William J Dally, and Kurt Keutzer. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv: Computer Vision and Pattern Recognition, 2017.

[18] Andrew G Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, and Hartwig Adam. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv: Computer Vision and Pattern Recognition, 2017.

[19] Mark B Sandler, Andrew G Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. MobileNetV2: Inverted residuals and linear bottlenecks. In Computer Vision and Pattern Recognition, pages 4510–4520, 2018.

[20] Xiangyu Zhang, Xinyu Zhou, Mengxiao Lin, and Jian Sun. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Computer Vision and Pattern Recognition, pages 6848–6856, 2018.

[21] Yuxin Wu and Kaiming He. Group normalization. arXiv: Computer Vision and Pattern Recognition, 2018.

[22] Tsung-Yi Lin, Priya Goyal, Ross B Girshick, Kaiming He, and Piotr Dollar. Focal loss for dense object detection. In International Conference on Computer Vision, pages 2999–3007, 2017.

[23] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In International Conference on Computer Vision, pages 1026–1034, 2015.

[24] BioHit. TongueImageDataset. http://github.com/BioHit/TongueImageDataset, 2014.

[25] PolyU/HIT Tongue Database. http://www.comp.polyu.edu.hk/biometrics/.

[26] Carsten Rother. GrabCut: Interactive foreground extraction using iterated graph cuts. In ACM SIGGRAPH, 2004.

[27] Pedro O. Pinheiro, Ronan Collobert, and Piotr Dollar. Learning to segment object candidates. 2015.

[28] Tsung-Yi Lin, Michael Maire, Serge J Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollar, and C Lawrence Zitnick. Microsoft COCO: Common objects in context. In European Conference on Computer Vision, pages 740–755, 2014.